Pattern-Oriented Innovation (POI)

Pattern-Oriented Innovation (POI) is a structured approach to codifying experiential knowledge into reusable, validated patterns that accelerate development and innovation. It aligns well with enterprise needs for efficiency, scalability, and reliability while reducing the risks associated with trial-and-error approaches.

Core Principles of POI:

Codified Experiential Knowledge – Capture and document best practices, lessons learned, and proven solutions from past projects into a structured pattern library.

Prebuilt Catalog of Patterns – Maintain a repository of reusable design, architecture, and implementation patterns tailored for specific use cases.

Accelerated Development – Enable rapid solution delivery by leveraging established patterns, reducing the need for reinvention.

Scalability & Compliance – Ensure patterns are vetted for compatibility, security, and architectural alignment with enterprise standards.

Innovation through Composition – Foster new ideas by combining existing patterns in novel ways rather than starting from scratch.

Human + Machine Synergy – Use automation where possible, but keep human expertise in the loop to refine and adapt patterns.

Innovation doesn’t have to start from scratch—POI enables enterprises to innovate faster, smarter, and with greater impact.

POI is built on a repository of architecture and design patterns, enabling rapid solution development through composition. Architecture patterns define scalable system structures, while design patterns offer reusable implementation best practices. By combining these building blocks, POI accelerates innovation, ensuring consistency, scalability, and efficiency.

While POI defines the foundational approach, A7i is its tangible realization: an advanced platform that brings POI to life with codified best practices, governance, and automation. Check a7i.

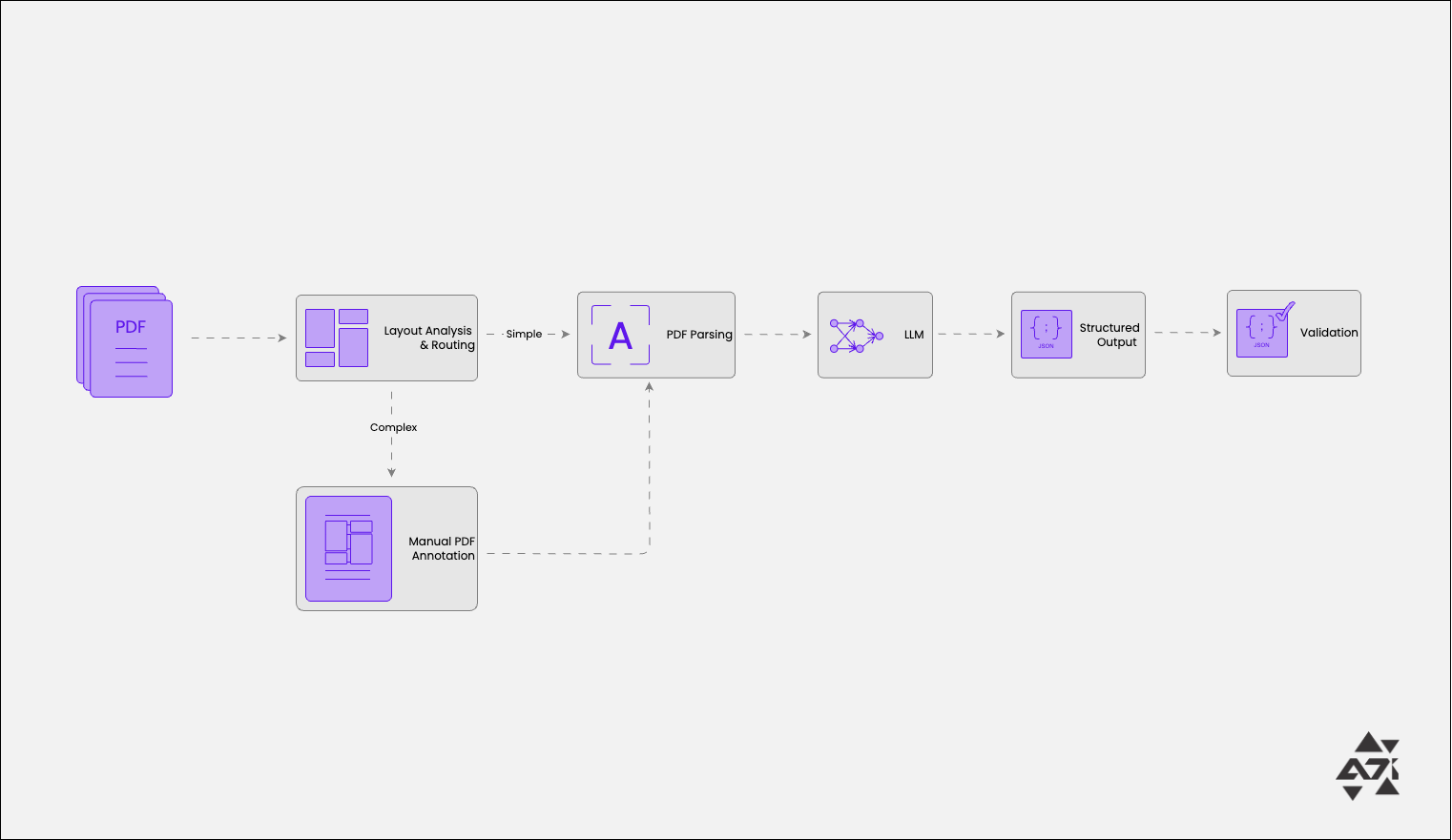

Variable-Layout PDF Processing Pattern

In a world where no two PDFs follow the same structure, current PDF parsers (which internally use AI models for layout detection) often fall short in extracting data accurately in the required reading order. The Variable-Layout PDF Processing Pattern addresses this challenge by introducing a complexity-aware routing mechanism. When dealing with highly inconsistent PDFs—where text may follow multiple reading orders on the same page or be scattered across different sections—the system identifies documents that exceed standard PDF parsing capabilities and routes them for manual annotation. Once annotated, PDF parsers can reliably extract information while maintaining the correct reading order.

This pattern ensures business reliability by balancing AI efficiency with human oversight, making it particularly valuable in domains where accurate data extraction is critical but layouts remain unpredictable. It works best when complex PDFs account for no more than about 5-10% of the total volume, so that automation scales while edge cases stay under control.
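
The complexity-aware routing step described above can be sketched as follows. This is a minimal illustration, not the pattern's actual implementation: the `PageLayout` fields, the scoring heuristic, and the threshold are all assumptions made for the example, standing in for whatever an upstream layout detector reports.

```python
from dataclasses import dataclass


@dataclass
class PageLayout:
    """Hypothetical layout summary from an upstream layout detector."""
    column_count: int          # number of detected text columns
    reading_order_count: int   # distinct reading orders found on the page
    scattered_blocks: int      # text blocks not attached to any column


def complexity_score(page: PageLayout) -> int:
    """Toy heuristic: extra columns, reading orders, and stray blocks add difficulty."""
    return (
        (page.column_count - 1)
        + 2 * (page.reading_order_count - 1)
        + page.scattered_blocks
    )


def route(pages: list[PageLayout], threshold: int = 3) -> str:
    """Route to manual annotation if the hardest page exceeds the threshold."""
    worst = max((complexity_score(p) for p in pages), default=0)
    return "manual_annotation" if worst > threshold else "automated_parser"


simple_doc = [PageLayout(column_count=1, reading_order_count=1, scattered_blocks=0)]
complex_doc = [PageLayout(column_count=3, reading_order_count=2, scattered_blocks=2)]

print(route(simple_doc))   # automated_parser
print(route(complex_doc))  # manual_annotation
```

In practice the threshold would be tuned so that only the small share of documents that defeat the parser reaches human annotators.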

Where This Applies (Including, But Not Limited To)

HR & Recruitment → Resume Processing: Every candidate’s resume follows a different structure, making automated parsing unreliable without intelligent layout adaptation.

Finance & Accounting → Invoice & Receipt Processing: Different vendors and companies issue invoices in varying formats, requiring adaptive AI parsing with human validation for accuracy.

Architecture Patterns

Pattern Repository

Agentic Document Processing & Insights Automation Pattern

Modern organizations need more than basic data extraction. The Agentic Document Processing & Insights Automation Pattern goes a step beyond Intelligent Document Processing (IDP) and Retrieval-Augmented Generation (RAG) by orchestrating workflows that bridge document processing and decision-making. Unlike traditional methods, this pattern applies business rules after extraction and references multiple knowledge sources to generate actionable insights, which saves time.

Why This Matters

Integrated workflows → Contracts, policies, and forms work together.

Beyond extraction → Insights require validation and transformation.

Business rules ensure accuracy → Context-aware processing enhances precision.

System coordination → Parsers, retrievers, and engines collaborate for insights.

Where This Applies (Including, But Not Limited To)

Contract Review → Extracts clauses, checks compliance, and suggests actions.

Patient Case Summaries → Groups medical records for clinical insights.

Invoice Processing → Verifies pricing and optimizes payments.

Auto Insurance Claims → Structures claims data for better processing.

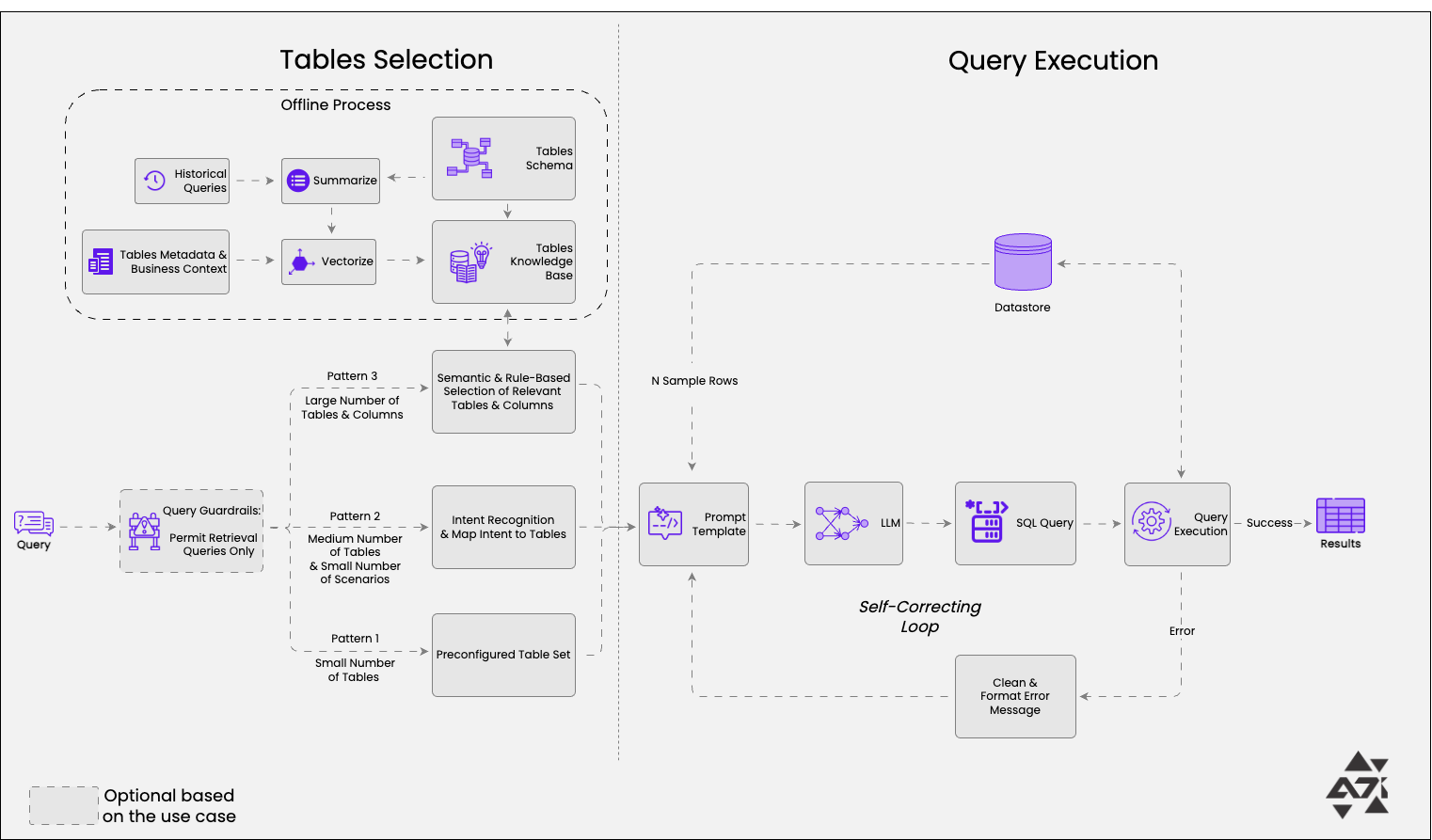

Text to Query Language Pattern

In enterprises, valuable data is often locked within structured data stores, databases, and data lakes, requiring complex queries to extract meaningful insights. The Text to Query Language Pattern bridges this gap by enabling users to query structured data using natural language, eliminating the need for manual query formulation and improving productivity.

Instead of relying on users to craft complex SQL, GraphQL, or other query languages, this pattern automatically translates natural language inputs into the required query format. This approach ensures seamless interaction with structured datasets, making insights more accessible across technical and non-technical teams.
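
A minimal sketch of this flow, assuming SQL as the target language and Python's built-in `sqlite3` as the data store. The `translate` callable is a hypothetical stand-in for the model that turns a question into a query; `fake_translate` returns a fixed query purely so the example runs without a model. The guard that accepts only a single `SELECT` statement is an illustrative safety choice, not something the pattern prescribes.

```python
import sqlite3
from typing import Callable


def answer_question(
    conn: sqlite3.Connection,
    question: str,
    translate: Callable[[str, str], str],
) -> list[tuple]:
    """Translate a natural-language question to SQL, validate it, and run it."""
    # Give the translator the table definitions so it can ground the query.
    schema = "\n".join(
        row[0]
        for row in conn.execute("SELECT sql FROM sqlite_master WHERE type='table'")
    )
    sql = translate(question, schema).strip().rstrip(";").strip()
    # Illustrative guard: allow only a single read-only statement.
    if not sql.lower().startswith("select") or ";" in sql:
        raise ValueError(f"Refusing to run non-SELECT query: {sql!r}")
    return conn.execute(sql).fetchall()


def fake_translate(question: str, schema: str) -> str:
    """Hypothetical placeholder for an LLM call; returns a canned query."""
    return "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region;"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EU", 10.0), ("US", 5.0), ("EU", 2.5)],
)

rows = answer_question(conn, "What are total sales per region?", fake_translate)
print(rows)
```

Passing the schema to the translator and validating its output before execution are the two places where most real deployments add further controls.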

Why This Matters

Brings RAG to structured data → Enables retrieval from databases and data lakes, not just unstructured text.

Enhances productivity → Users can ask questions in plain language instead of writing complex queries.

Works across any query language → Adapts to SQL, NoSQL, GraphQL, and other database query formats.

Democratizes data access → Empowers non-technical users to extract insights without deep database expertise.

Where This Applies (Including, But Not Limited To)

Enterprise Analytics → Teams can query financial, operational, or sales data without SQL knowledge.

Customer Support → Agents retrieve customer history from relational databases using natural language.

Healthcare Records → Doctors access patient data stored in structured formats without manual querying.

Multi-Source RAG Pattern

Enterprises rely on diverse knowledge sources—databases, document repositories, APIs, and more—to provide comprehensive answers. The Multi-Source RAG Pattern is designed to handle retrieval across multiple knowledge sources, ensuring that responses are well-augmented, contextually relevant, and backed by the right data.

Each knowledge source may have its own ingestion and indexing strategy, requiring a robust orchestration layer to manage retrieval, augmentation, and context fusion before passing data to an LLM. This pattern is especially valuable in enterprise environments where information is fragmented across multiple structured and unstructured data sources.

Why This Matters

Aggregates insights across multiple sources → Ensures responses are well-rounded and contextually complete.

Handles different ingestion/indexing mechanisms → Adapts to structured (databases) and unstructured (documents, APIs) data.

Essential for enterprise applications → Organizations often rely on multiple internal and external knowledge bases.

Where This Applies (Including, But Not Limited To)

Enterprise Knowledge Assistants → Pulls insights from internal wikis, customer support logs, and compliance documents.

Financial Research & Analysis → Integrates market data, regulatory updates, and company reports for precise insights.

Legal & Compliance Queries → Retrieves information from case law databases, corporate policies, and contracts.

Healthcare & Medical Insights → Combines clinical guidelines, research papers, and patient records for informed decision-making.

Responsible & Secure RAG Pattern

As AI adoption accelerates, ensuring responsibility, security, and compliance in Retrieval-Augmented Generation (RAG) systems becomes critical. The Responsible & Secure RAG Pattern is designed to safeguard enterprise data, mitigate AI risks, and ensure regulatory compliance, making AI applications more trustworthy and resilient.

AI systems are inherently non-deterministic and susceptible to manipulation. This pattern introduces governance controls, data security layers, and privacy-preserving techniques to prevent unauthorized access, enforce compliance, and reduce AI bias. It is especially valuable for industries governed by strict regulatory frameworks where data integrity and security are non-negotiable.

Why This Matters

Prevents AI manipulation & biases → Ensures fact-based, responsible AI responses.

Safeguards enterprise data → Implements encryption, access controls, and data masking.

Ensures regulatory compliance → Aligns with GDPR, HIPAA, SOC 2, and other industry standards.

Builds trust in AI adoption → Enables enterprises to deploy AI responsibly with transparency.

Where This Applies (Including, But Not Limited To)

Finance & Banking → Ensures secure retrieval of sensitive financial records while preventing AI hallucinations.

Healthcare & Life Sciences → Protects patient data and aligns AI-driven insights with HIPAA compliance.

Legal & Compliance → Ensures legal document processing maintains confidentiality and accuracy.

Enterprise AI Assistants → Applies role-based access controls to prevent unauthorized data exposure.
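
Two of the controls named above, role-based access and data masking, can be sketched as a filter applied to retrieved chunks before they reach the model. The `Chunk` type, the role names, and the email-only masking rule are assumptions for illustration; a production system would rely on its own policy engine and a broader set of detectors.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    """Hypothetical retrieved chunk tagged with the roles allowed to see it."""
    text: str
    allowed_roles: set[str]


# Simplified email matcher used only to demonstrate masking.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def authorized_context(chunks: list[Chunk], role: str) -> list[str]:
    """Drop chunks the role may not see, then mask emails in what remains."""
    visible = [c for c in chunks if role in c.allowed_roles]
    return [EMAIL.sub("[REDACTED_EMAIL]", c.text) for c in visible]


chunks = [
    Chunk("Contact jane.doe@example.com for payroll.", {"hr"}),
    Chunk("Office opens at 9am.", {"hr", "staff"}),
]

print(authorized_context(chunks, "staff"))
print(authorized_context(chunks, "hr"))
```

Filtering before prompt assembly means a restricted chunk never enters the model's context, rather than relying on the model to withhold it.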

Design Patterns

Chunk Storage Patterns

-

Large documents can overwhelm retrieval by either losing context with small chunks or including irrelevant details with large ones. The Hierarchical Index pattern solves this by structuring text into multi-level chunks, ensuring efficient and context-aware retrieval. Starting with high-level summaries and drilling into finer details as needed, it reduces costs, improves coherence, and adapts to query scope. Without it, retrieval risks inefficiency, missing key connections, or overwhelming the model with excess data.
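
As a rough sketch of the idea, the two-level index below maps section summaries to their detail chunks and searches top-down. Word overlap stands in for a real similarity measure, and the sample data and function names are made up for the example.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set; a stand-in for an embedding-based comparison."""
    return set(re.findall(r"\w+", text.lower()))


def overlap(query: str, text: str) -> int:
    return len(tokens(query) & tokens(text))


# Level 1: section summaries. Level 2: detail chunks under each summary.
index = {
    "Billing overview: invoices, refunds, payment methods": [
        "Refunds are issued within 5 days of approval.",
        "Invoices are sent on the first of each month.",
    ],
    "Security overview: passwords, access, audit logs": [
        "Passwords must be rotated every 90 days.",
        "Audit logs are retained for one year.",
    ],
}


def retrieve(query: str, index: dict[str, list[str]]) -> tuple[str, str]:
    """Pick the best summary first, then the best chunk inside that section."""
    best_summary = max(index, key=lambda s: overlap(query, s))
    best_chunk = max(index[best_summary], key=lambda c: overlap(query, c))
    return best_summary, best_chunk


summary, chunk = retrieve("When are refunds issued?", index)
print(summary)
print(chunk)
```

Only the chunks under the winning summary are scored, which is where the cost saving over a flat search comes from.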

-

Complex data often has relationships beyond mere topical overlap. The Graph Index pattern addresses this by mapping knowledge (chunks) as a web of interconnected nodes, capturing cause-effect, hierarchical, and lateral links. This allows advanced relationship-based queries, improving retrieval precision and context awareness. Without this approach, systems may retrieve irrelevant chunks or miss crucial details hidden in deeper semantic relationships.
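
A small sketch of relationship-based retrieval: chunks are nodes, typed edges link them, and a seed chunk is expanded by following edges for a limited number of hops. The chunk texts, the `caused_by` relation, and the hop limit are illustrative assumptions.

```python
from collections import deque

# Nodes: chunk id -> text. Edges: chunk id -> list of (relation, target id).
chunks = {
    "c1": "Server overheated.",
    "c2": "Cooling fan failed.",
    "c3": "Fan supplier changed in March.",
    "c4": "Unrelated HR policy.",
}
edges = {
    "c1": [("caused_by", "c2")],
    "c2": [("caused_by", "c3")],
}


def expand(seed: str, edges: dict, max_hops: int = 2) -> list[str]:
    """Breadth-first expansion from a seed chunk, up to max_hops edges away."""
    seen = [seed]
    queue = deque([(seed, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for _relation, target in edges.get(node, []):
            if target not in seen:
                seen.append(target)
                queue.append((target, depth + 1))
    return seen


related = expand("c1", edges)
print([chunks[c] for c in related])
```

Here the supplier change shares no keywords with the overheating report, yet it is reached through the causal chain, which a purely topical search would likely miss.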

Evaluation Patterns

-

Evaluating structured outputs demands more than a simple true/false check. The Structured Output Evaluation pattern tackles the hidden challenges of diverse data types, nested elements, and arrays, providing systematic ways to measure accuracy with metrics like MSE, F1, or BLEU. By converting comparisons into numerical scores, it pinpoints weaknesses you might otherwise overlook, ensuring robust, actionable insights for your AI’s structured outputs.
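
One way to turn a nested comparison into a numerical score is to flatten both outputs into path/value pairs and compute a field-level F1 over exact matches. The sketch below assumes exact equality per field; numeric fields could instead use an error metric such as MSE. The function names and sample records are invented for the example.

```python
def flatten(obj, prefix: str = "") -> dict:
    """Flatten nested dicts and lists into {"path": leaf_value} pairs."""
    items = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            items.update(flatten(value, path))
    elif isinstance(obj, list):
        for i, value in enumerate(obj):
            items.update(flatten(value, f"{prefix}[{i}]"))
    else:
        items[prefix] = obj
    return items


def field_f1(predicted, expected) -> float:
    """F1 over leaf fields, counting a field correct only on exact match."""
    pred, gold = flatten(predicted), flatten(expected)
    correct = sum(1 for k, v in pred.items() if k in gold and gold[k] == v)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


expected = {"vendor": "Acme", "total": 120.0, "items": [{"sku": "A1", "qty": 2}]}
predicted = {"vendor": "Acme", "total": 125.0, "items": [{"sku": "A1", "qty": 2}]}

print(flatten(expected))
print(field_f1(predicted, expected))  # 3 of 4 fields match
```

Because every leaf has a path, the same flattening also shows which field was wrong (here `total`), not just that the score dropped.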

-

Evaluating AI-generated outputs is often subjective, inconsistent, and costly. The Formula As Judge pattern replaces manual or LLM-based evaluation with structured, mathematical metrics, ensuring objective, repeatable, and scalable assessments. By using predefined formulas (such as BLEU for translations or ROUGE for summaries), this approach automates quality checks, reduces bias, and streamlines benchmarking. Ideal for structured tasks, it provides clear, quantitative insights into model performance without the need for human intervention.
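
To make the idea concrete, here is a simplified unigram-overlap F1 in the spirit of ROUGE-1. It is a sketch for illustration only and not the reference ROUGE implementation: tokenization is a plain word split, with no stemming or stopword handling.

```python
import re
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """F1 of clipped unigram overlap between a candidate and a reference."""
    cand = Counter(re.findall(r"\w+", candidate.lower()))
    ref = Counter(re.findall(r"\w+", reference.lower()))
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(unigram_f1("the cat sat", "the cat sat"))
print(unigram_f1("the cat", "the cat sat on the mat"))
print(unigram_f1("dog", "cat"))
```

The same inputs always yield the same score, which is the repeatability this pattern trades against the nuance of human or model judges.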

-

The Model As Judge pattern automates the evaluation of subjective AI outputs, using an LLM to assess coherence, factual accuracy, and relevance. It scales efficiently, reducing reliance on human reviewers while maintaining flexibility in assessing open-ended tasks. While effective for nuanced evaluations, it may introduce biases or hallucinations, requiring periodic benchmarking against human judgments for reliability.

-

The Human As Judge pattern ensures high-quality evaluation for AI-generated content by leveraging human expertise in areas where automated assessments fall short. It captures subjective factors like ethics, creativity, contextual accuracy, and domain expertise, making it essential for high-stakes applications. While it provides rich insights and expert validation, its scalability is limited, and it requires careful structuring to mitigate human bias and maintain consistency.

-

The User As Judge pattern integrates direct user feedback into AI evaluation, ensuring alignment with real-world needs and personal preferences. It enables continuous learning, improves engagement, and refines AI models and AI-based systems through explicit ratings and implicit behavior tracking. While highly scalable for consumer applications, it requires careful bias filtering and structured aggregation to ensure meaningful insights drive AI improvements.

Extraction Patterns

-

Large documents can disrupt an LLM’s in-context recall, making data extraction prone to errors or unpredictable results. The Long Document Structured Extraction pattern addresses this challenge by segmenting text, defining a clear output format, and optimizing prompt design. This approach enhances accuracy, reliability, and scalability—even for complex or visual-first formats—ensuring consistent, structured outcomes from your AI systems.

Chunking Patterns

-

Dividing large text into fixed chunks can disrupt comprehension by splitting sentences or concepts, leading to poor retrieval quality. The Sliding Window pattern mitigates this by overlapping chunks, ensuring continuity and preserving critical context. While it increases redundancy and retrieval costs, it improves accuracy by preventing lost information. Without it, naive chunking risks fragmenting key insights, reducing model effectiveness in RAG applications.
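
A minimal sketch of overlapping chunking over a token list. The `size` and `overlap` parameter names are chosen for the example; real systems typically count model tokens rather than whitespace-separated words.

```python
def sliding_window(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into chunks of `size`, each sharing `overlap` tokens with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        # Stop once a chunk has reached the end, to avoid a redundant tail chunk.
        if start + size >= len(tokens):
            break
    return chunks


words = "a b c d e f g".split()
for chunk in sliding_window(words, size=4, overlap=2):
    print(chunk)
```

With `size=4` and `overlap=2`, every boundary falls inside some other chunk, at the cost of storing roughly twice as many tokens.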

-

Retrieving relevant information in large-scale RAG applications can be inefficient if all chunks are treated equally. The Metadata Attachment pattern assigns structured attributes (e.g., author, date, category) to chunks, enabling precise filtering before retrieval. This reduces search space, improves accuracy, and cuts computational costs. Without it, retrieval accuracy drops and the LLM is likely to receive a lot of noisy data.
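
The filtering step can be sketched as an exact-match filter over per-chunk metadata that runs before any similarity search. The `TaggedChunk` type and the `category` and `year` keys are assumptions for the example.

```python
from dataclasses import dataclass, field


@dataclass
class TaggedChunk:
    """Hypothetical chunk carrying structured attributes alongside its text."""
    text: str
    metadata: dict = field(default_factory=dict)


def filter_chunks(chunks: list[TaggedChunk], **required) -> list[TaggedChunk]:
    """Keep only chunks whose metadata matches every required key/value pair."""
    return [
        c for c in chunks
        if all(c.metadata.get(key) == value for key, value in required.items())
    ]


corpus = [
    TaggedChunk("Q3 revenue grew 8%.", {"category": "finance", "year": 2024}),
    TaggedChunk("Q3 revenue grew 5%.", {"category": "finance", "year": 2023}),
    TaggedChunk("New badge policy.", {"category": "hr", "year": 2024}),
]

candidates = filter_chunks(corpus, category="finance", year=2024)
print([c.text for c in candidates])
```

Only the surviving candidates would then be passed to the (more expensive) semantic search.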

-

The Small-to-Big pattern balances precise retrieval with rich contextual synthesis in RAG applications. Small chunks ensure high-accuracy search, while larger contextual chunks provide depth during response generation. Without it, retrieval may either introduce excessive noise from large chunks or lose critical context with overly small chunks, leading to incomplete or misleading outputs. This pattern optimizes both precision and completeness in AI-driven knowledge retrieval.
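
A rough sketch of the mechanism: search runs over small chunks, but the text handed to generation is the larger parent each small chunk belongs to. Word overlap stands in for a real similarity score, and the sample data is invented.

```python
import re

# Larger contextual chunks, keyed by a parent id.
parents = {
    "p1": "Refund policy. Refunds are issued within 5 days. Contact billing for exceptions.",
    "p2": "Security policy. Passwords rotate every 90 days. Audit logs are kept one year.",
}

# Small search chunks, each pointing back to its parent.
small_chunks = [
    ("Refunds are issued within 5 days.", "p1"),
    ("Contact billing for exceptions.", "p1"),
    ("Passwords rotate every 90 days.", "p2"),
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve_parent(query: str) -> str:
    """Match against small chunks, then return the matching chunk's parent text."""
    _text, parent_id = max(
        small_chunks, key=lambda c: len(tokens(query) & tokens(c[0]))
    )
    return parents[parent_id]


print(retrieve_parent("How fast are refunds issued?"))
```

The query matches a single precise sentence, yet the model also receives the neighboring sentence about exceptions from the same parent.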

Reliability Patterns

-

Complex or ethically sensitive AI decisions can pose huge risks if left ungoverned. The Human In The Loop pattern addresses this by ensuring a human reviews and approves high-stakes AI recommendations. This fosters accountability, compliance, and trust—while capturing user inputs to refine the model. It’s essential when mistakes could lead to severe repercussions or require expert judgment, bridging the gap between AI efficiency and human oversight.

-

High-autonomy AI systems can produce unnoticed errors or drift over time. The Human On The Loop pattern keeps human supervisors in the background, ready to intervene if issues arise. It balances AI efficiency with oversight—automating routine decisions while escalating uncertain or high-impact cases. Humans then update rules and models, preventing failures and maintaining trust without blocking everyday operations.
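
The split between automated and escalated decisions can be sketched with a simple confidence floor. The decision records, the `confidence` field, and the 0.9 floor are assumptions for the example; impact-based escalation would add further conditions.

```python
def supervise(decisions: list[dict], confidence_floor: float = 0.9):
    """Auto-apply confident decisions and queue the rest for a human supervisor."""
    automated, escalated = [], []
    for decision in decisions:
        target = automated if decision["confidence"] >= confidence_floor else escalated
        target.append(decision["id"])
    return automated, escalated


decisions = [
    {"id": "claim-1", "confidence": 0.97},
    {"id": "claim-2", "confidence": 0.62},
    {"id": "claim-3", "confidence": 0.91},
]

automated, escalated = supervise(decisions)
print("automated:", automated)
print("escalated:", escalated)
```

Routine cases proceed without waiting on anyone, while the supervisor's queue holds only the uncertain ones.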

-

If your AI system can act autonomously but you still want to refine or confirm decisions after execution, the Human After the Loop approach ensures you won’t miss hidden oversights. By verifying AI decisions post-action, you harness the speed of automation while upholding human expertise. This helps maintain control and reliability, even for tasks where you might not expect to need a final check.

Query Optimization Patterns

-

User queries are often vague, overly broad, or missing key details, leading to incomplete retrieval. The Query Expansion pattern enhances search accuracy by generating multiple reformulated queries—capturing synonyms, subtopics, and alternative phrasings. This improves retrieval precision, ensuring relevant results even when queries are ambiguous or domain-specific. Without it, crucial context may be lost, making AI responses less reliable.

-

User queries are often ambiguous, overly complex, or poorly structured, leading to inaccurate retrieval in RAG systems. The Query Transformation pattern refines user input—rewriting queries, generating hypothetical documents, or using step-back prompting—to improve retrieval precision. This ensures that relevant information isn’t lost due to phrasing mismatches, making AI systems more resilient to diverse query styles and specialized domain language.

-

Relying solely on natural language queries in RAG systems can lead to inefficient, inaccurate, or incomplete retrieval, especially when structured data sources like SQL or graph databases are involved. The Query Construction pattern translates user queries into optimized structured queries (e.g., SQL, Cypher), ensuring precise, efficient data access. Without it, retrieval remains limited to text search, missing critical structured insights.

Retrieval Patterns

-

Hybrid retrieval merges the strengths of sparse and dense retrieval, capturing rare keywords while handling semantic nuances. This pattern ensures robust coverage for specialized domains or complex queries. By fusing both methods and reranking results, you avoid mistakes from single-mode retrieval—like missing critical context or focusing only on exact matches. You might not expect to need it—until your standard retriever starts overlooking vital information.
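
The text does not say how the two result lists are fused. One common choice is reciprocal rank fusion (RRF), sketched below as an assumption rather than as this pattern's prescribed method. The document ids and the two input rankings are made up, and `k=60` is a conventional default for RRF.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores the sum of 1 / (k + rank) across lists."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


sparse_results = ["d1", "d2", "d3"]   # e.g. keyword-based ranking
dense_results = ["d3", "d1", "d4"]    # e.g. embedding-based ranking

fused = reciprocal_rank_fusion([sparse_results, dense_results])
print(fused)
```

Documents that appear in both lists (`d1`, `d3`) rise above those found by only one retriever, without needing the two scoring scales to be comparable.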

-

General-purpose retrievers can falter with domain-specific jargon and structures, returning irrelevant or incomplete results. This pattern fine-tunes your retriever to specialized data, ensuring higher precision, fewer misunderstandings, and a more reliable foundation for your LLM.

Post-Retrieval Patterns

-

Retrieving multiple chunks in RAG can overwhelm the model, surfacing irrelevant or contradictory passages while key information gets missed. Reranking prioritizes the most relevant and useful chunks, ensuring that the highest-quality context is used within token limits. Without it, critical insights may be buried, noise can degrade responses, and efficiency suffers. This pattern refines retrieval, optimizing accuracy, cost, and performance.

-

Retrieving too much text in RAG systems can overwhelm the model, increasing costs, slowing responses, and degrading accuracy. The Compression pattern reduces token usage while preserving essential information by either filtering redundant details or using a smaller model to condense content. Without it, excessive context can dilute the model’s focus, leading to inefficient retrieval and lower-quality responses.

RAG Orchestration Patterns

-

Not all queries are the same—some require facts, others creativity, and some demand specialized expertise. A one-size-fits-all approach leads to inefficiencies, high costs, and poor results. The Routing pattern intelligently directs each query to the most suitable module, ensuring optimal accuracy, faster responses, and resource efficiency. It keeps systems modular and scalable, preventing unnecessary processing while delivering the right answer through the right path.

-

Complex queries often require multiple retrievals, refinements, and validations before reaching a reliable answer. Without a structured iteration process, systems risk either premature conclusions or excessive loops that waste resources. The Controlled Iteration pattern ensures each step—generation, assessment, and retrieval—is carefully orchestrated, leading to accurate, efficient, and explainable results. It balances quality with cost, preventing both under-explored answers and unnecessary processing.
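
A minimal sketch of a bounded retrieve, generate, assess loop. The three callables are hypothetical stand-ins for real retrieval, generation, and evaluation components, and the stubs below exist only so the example runs. The round cap guards against excessive loops, and the quality threshold guards against stopping too early.

```python
from typing import Callable


def controlled_iteration(
    query: str,
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str, list[str]], str],
    assess: Callable[[str], float],
    max_rounds: int = 3,
    threshold: float = 0.8,
) -> tuple[str, float, int]:
    """Iterate until the answer scores at least `threshold` or `max_rounds` is reached."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    context: list[str] = []
    answer, score, rounds_used = "", 0.0, 0
    for round_no in range(1, max_rounds + 1):
        rounds_used = round_no
        context.extend(retrieve(query, round_no))   # gather more evidence
        answer = generate(query, context)           # draft from all evidence so far
        score = assess(answer)                      # judge the draft
        if score >= threshold:
            break
    return answer, score, rounds_used


# Stub components, for illustration only.
def stub_retrieve(query: str, round_no: int) -> list[str]:
    return [f"fact{round_no}"]


def stub_generate(query: str, context: list[str]) -> str:
    return " ".join(context)


def stub_assess(answer: str) -> float:
    return min(1.0, len(answer.split()) / 2)


result = controlled_iteration("example question", stub_retrieve, stub_generate, stub_assess)
print(result)
```

Returning the score and the number of rounds alongside the answer keeps the process explainable: a caller can see why the loop stopped.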

-

Relying on a single retrieval source can lead to incomplete, biased, or shallow responses. The Fusion pattern intelligently merges outputs from multiple retrieval or generation pipelines, ensuring richer, more comprehensive answers. By aggregating diverse perspectives—whether from different models, data sources, or domains—this pattern enhances accuracy, robustness, and flexibility. It prevents critical information gaps while maintaining efficiency, making it ideal for complex queries that demand a well-rounded response.

Flow Engineering Patterns

-

The Linear pattern structures a system into a clear, step-by-step pipeline, ensuring modularity, simplicity, and ease of debugging. It prevents unnecessary complexity, making evaluation and upgrades straightforward. While effective for structured workflows, it may be limiting for adaptive or iterative processes that require continuous adjustments.

-

Dynamic decision-making within a GenAI system ensures that different components—retrieval, processing, or generation—adapt based on context, resource constraints, or task complexity. Without this, rigid workflows can lead to inefficiencies, misallocation of resources, or suboptimal outputs. The Conditional Pattern enables adaptive execution by selectively routing tasks, optimizing accuracy, efficiency, and compliance across various stages of the system.

-

Branching enables a GenAI system to explore multiple paths in parallel—whether through diverse retrievals, alternative generations, or different reasoning methods—before consolidating results. Without this, responses may be constrained by a single execution path, limiting depth, diversity, and robustness. This pattern ensures richer, more comprehensive outputs, making it particularly valuable for complex queries, creative tasks, and uncertainty handling.

-

Looping enables a GenAI system to iteratively refine retrieval and generation, adapting responses based on intermediate results. This pattern ensures queries are progressively clarified, knowledge gaps are addressed, and responses improve over multiple iterations. Without it, complex or ambiguous queries risk premature or incomplete answers, limiting depth and accuracy in the system’s outputs.